BACKGROUND

This is a research project I carried out during my graduate studies in China: a robot telecontrol system with binocular vision in an enhanced reality environment. The enhanced reality environment mimics the remote environment where the robot operates. The operator manipulates a virtual robot in this environment and then sends the resulting instructions to the real remote robot.

Because the virtual reality (VR) environment is a copy of the real working environment, the operator can rehearse a task with the virtual robot as many times as needed until they find the best way to perform it.

I designed and developed the virtual reality environment using Photoshop, 3ds Max, C++ and Coin3D.

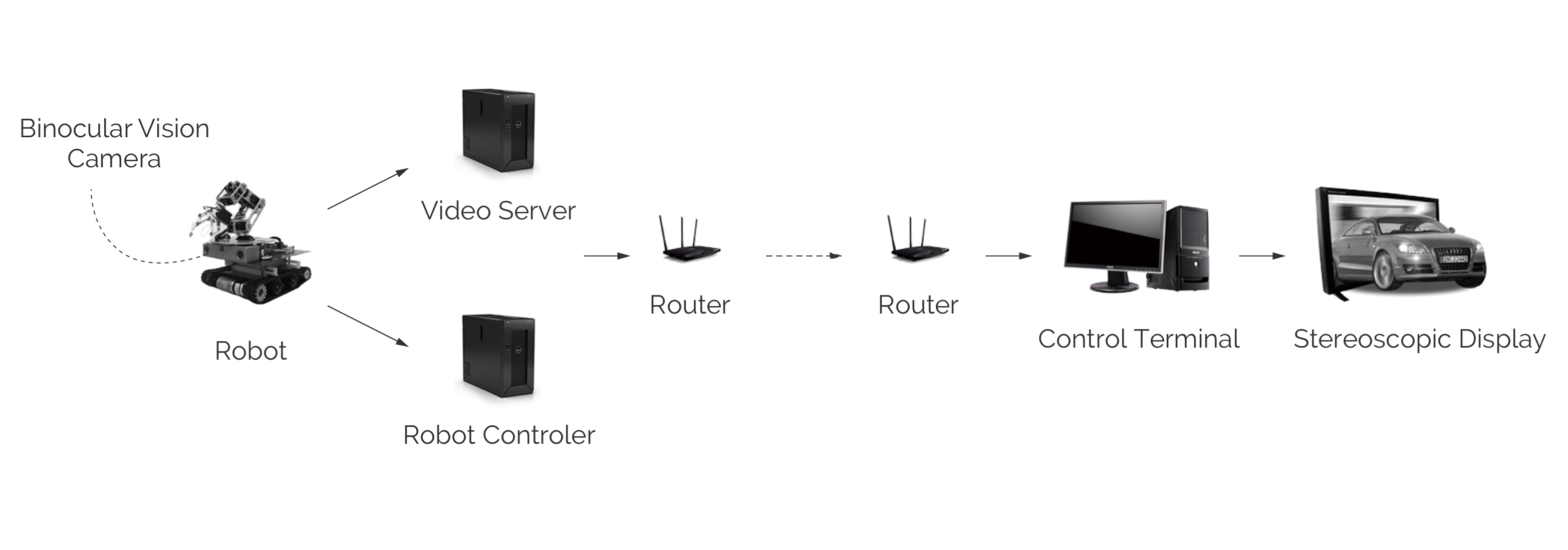

SYSTEM ARCHITECTURE

Our system has three main modules: the robot module, the robot control and video server module, and the control terminal.

The binocular vision camera mounted on the remote robot streams binocular video to the control terminal, and a VR environment is built on top of this video. A virtual robot, modeled at 1:1 scale to the real robot, is trained to perform specific tasks, such as grasping an object. Once the virtual robot correctly grasps the object of interest, its action parameters are recorded and sent to the real robot at the remote site, where the real grasp is carried out.
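The record-and-send step described above can be sketched as follows. This is a minimal illustration, not the project's actual protocol: the `ActionRecord` fields, the `encode` and `decode` helpers, and the semicolon-separated text format are all assumptions made for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical record of one action rehearsed on the virtual robot.
struct ActionRecord {
    std::string name;                 // e.g. "grasp"
    std::vector<double> jointAngles;  // degrees, one per joint (assumed layout)
    double gripperOpening;            // millimetres (assumed unit)
};

// Encode a record as one text line: name;gripperOpening;angle1,angle2,...
// Values use the default stream precision (about six significant digits).
std::string encode(const ActionRecord& a) {
    // The separator must not appear inside the name.
    assert(a.name.find(';') == std::string::npos);
    std::ostringstream out;
    out << a.name << ';' << a.gripperOpening << ';';
    for (std::size_t i = 0; i < a.jointAngles.size(); ++i) {
        if (i > 0) out << ',';
        out << a.jointAngles[i];
    }
    return out.str();
}

// Decode a line produced by encode() back into a record.
ActionRecord decode(const std::string& line) {
    std::istringstream in(line);
    ActionRecord a;
    std::string gripper, angles, token;
    std::getline(in, a.name, ';');
    std::getline(in, gripper, ';');
    std::getline(in, angles);
    a.gripperOpening = std::stod(gripper);
    std::istringstream angleStream(angles);
    while (std::getline(angleStream, token, ',')) {
        a.jointAngles.push_back(std::stod(token));
    }
    return a;
}
```

In this sketch the control terminal would call `encode` once the virtual grasp succeeds, and the robot side would call `decode` before executing the motion. How the line travels between the two is left open.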

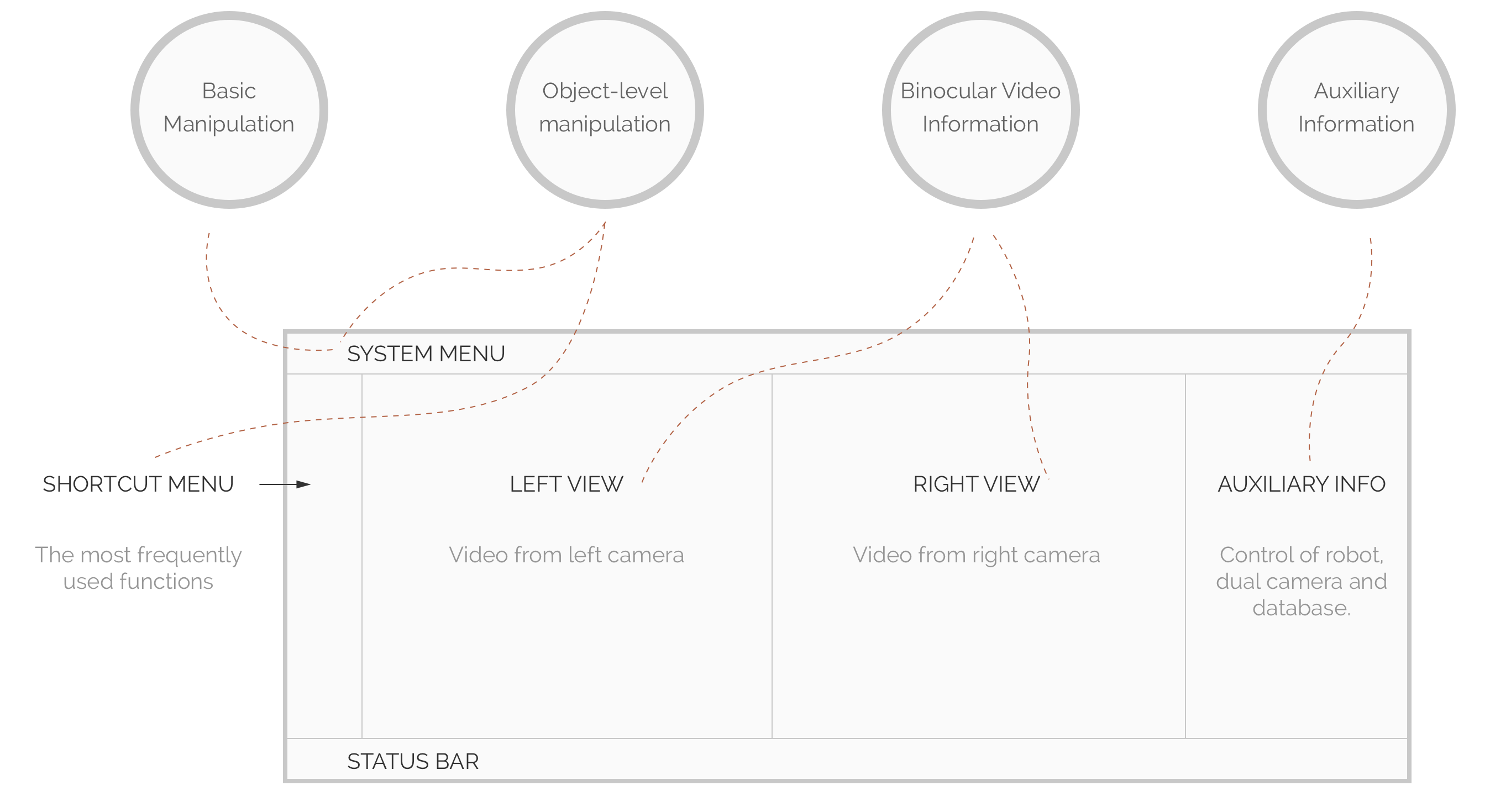

INFORMATION ARCHITECTURE

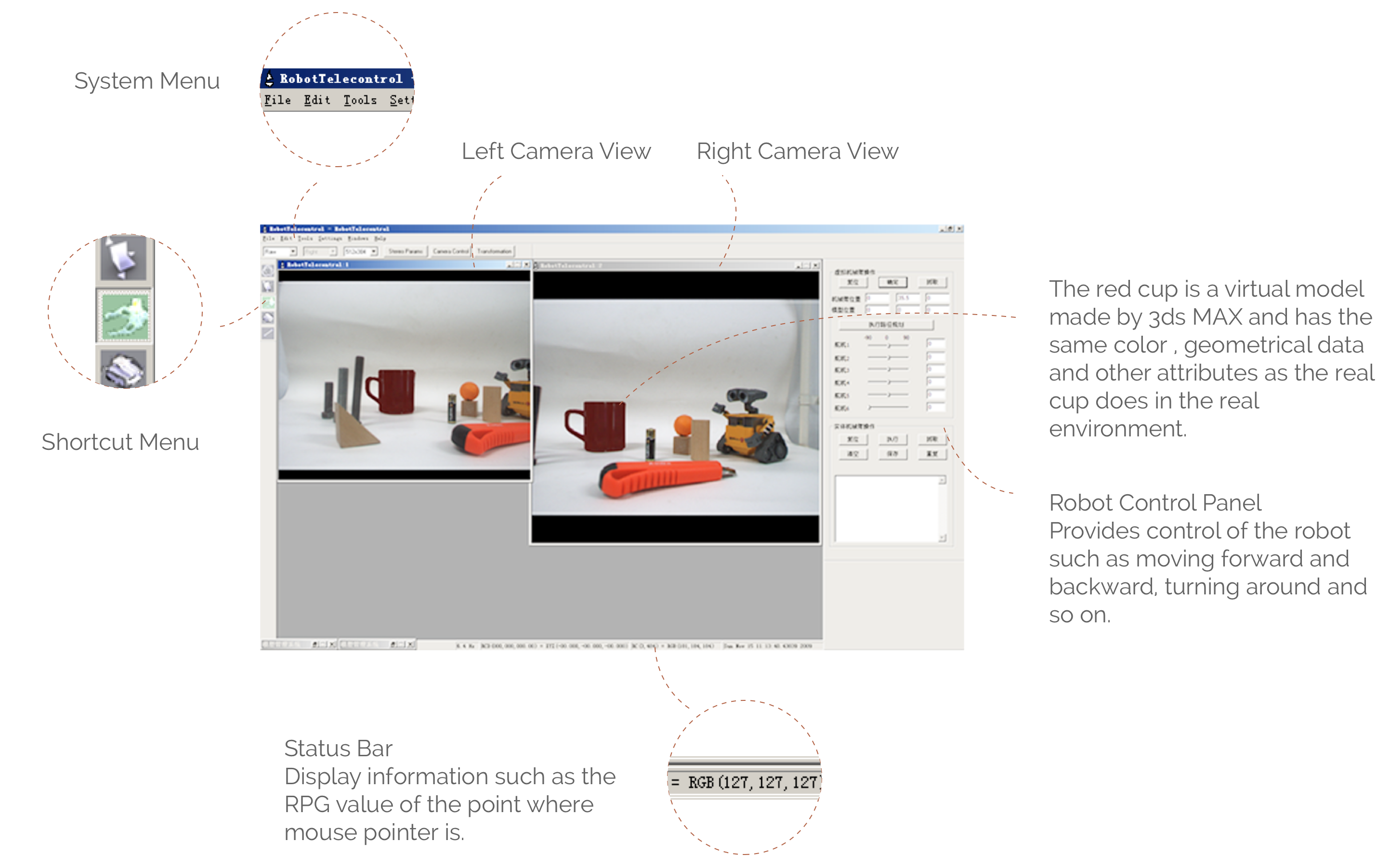

I classified all the information into four categories: Basic Manipulation, Object-level Manipulation, Binocular Video Information and Auxiliary Information. I then designed the user interface layout, which contains the six parts shown below.

Basic Manipulation

Covers the most fundamental, system-level operations, such as system initialization, camera control, and database management.

Object-level Manipulation

Used specifically for operating virtual objects. It lets us precisely control the behavior of a virtual object and manage its enhanced information, such as color and volume.

Binocular Video Information

Provides the real-world images that serve as the background of the virtual reality environment.

Auxiliary Information

Used to control the robot and the dual camera and to manage the database.

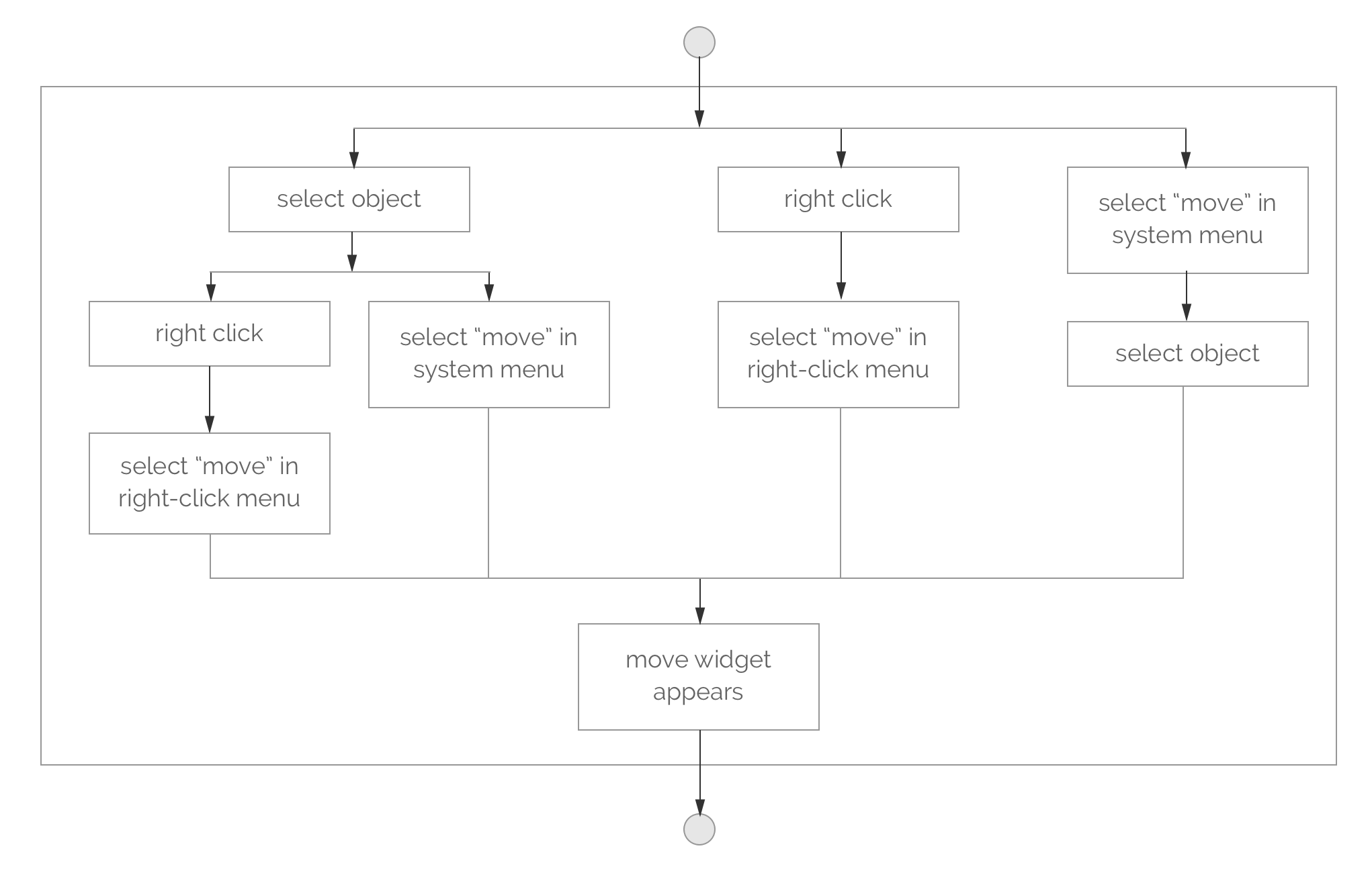

WORK FLOWS

With the main framework finished, I designed the workflows for the key functions. One of the 20 core workflows is shown below.

Operation Flow of Moving Object

USER INTERFACE

Once the information architecture was confirmed, I focused on designing and coding the user interface. I designed the interface in Photoshop and implemented it with the Microsoft Foundation Class Library (MFC).

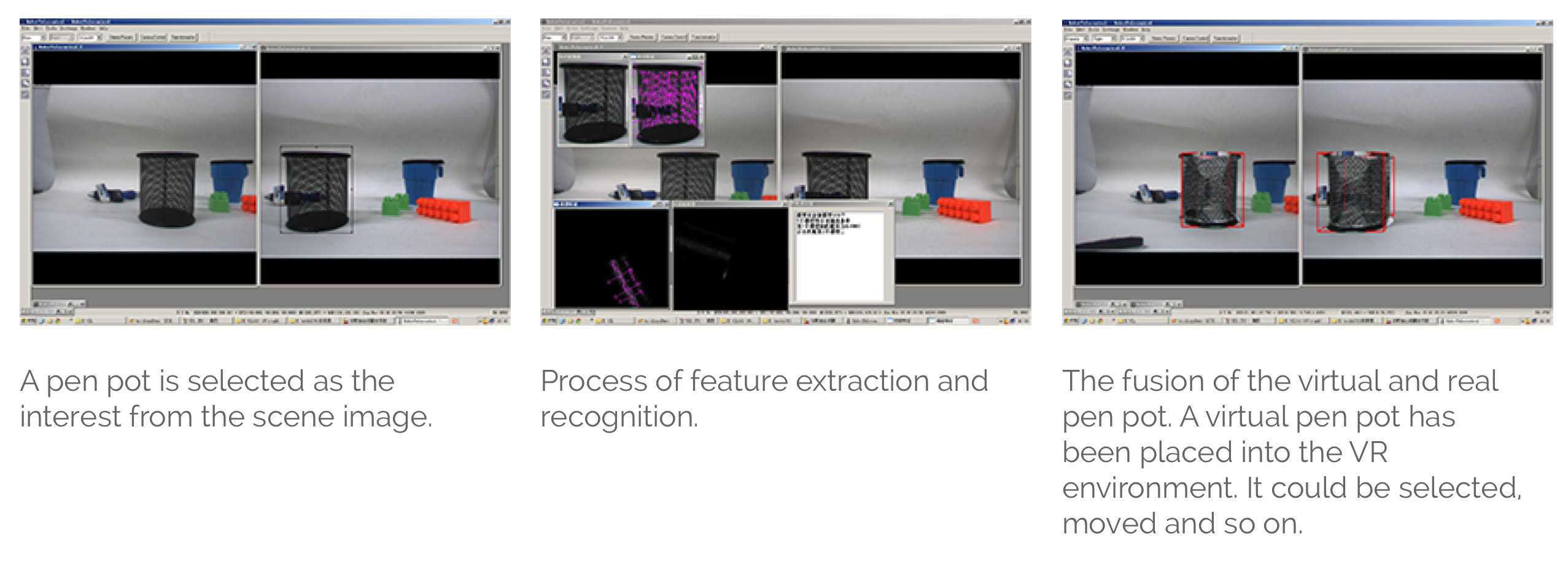

OBJECT RECOGNITION AND OPERATION

To simulate operating on an object in the remote real environment, we first need to replace the real object with a virtual one by selecting the target object in the video stream.

Object Recognition

Using the binocular video, we can obtain the coordinates of the real object and then replace it with the matching virtual object from our object repertoire.
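One common way to recover coordinates from a binocular pair is triangulation from disparity. The sketch below is not the method confirmed for this project. It assumes a rectified pinhole stereo rig, and the `StereoRig` parameters and the `triangulate` helper are hypothetical names introduced for illustration. For a point seen at column `xLeft` in the left image and `xRight` in the right image, the depth is Z = f * B / (xLeft - xRight), where f is the focal length in pixels and B is the baseline.

```cpp
#include <cassert>

// Assumed calibration of a rectified stereo rig (hypothetical values in use).
struct StereoRig {
    double focalPx;    // focal length in pixels
    double baselineM;  // distance between the two cameras in metres
    double cx, cy;     // principal point of the left camera in pixels
};

struct Point3 {
    double x, y, z;    // metres, in the left camera frame
};

// Triangulate one matched pixel pair. Returns false when the disparity is
// not positive, which means the match is invalid or the point is at infinity.
bool triangulate(const StereoRig& rig, double xLeft, double yLeft,
                 double xRight, Point3& out) {
    assert(rig.focalPx > 0.0 && rig.baselineM > 0.0);
    const double disparity = xLeft - xRight;
    if (disparity <= 0.0) {
        return false;
    }
    const double z = rig.focalPx * rig.baselineM / disparity;
    out.x = (xLeft - rig.cx) * z / rig.focalPx;
    out.y = (yLeft - rig.cy) * z / rig.focalPx;
    out.z = z;
    return true;
}
```

The resulting point is expressed in the left camera's frame. Placing the virtual object in the scene would additionally require the camera's pose relative to the robot, which is outside this sketch.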

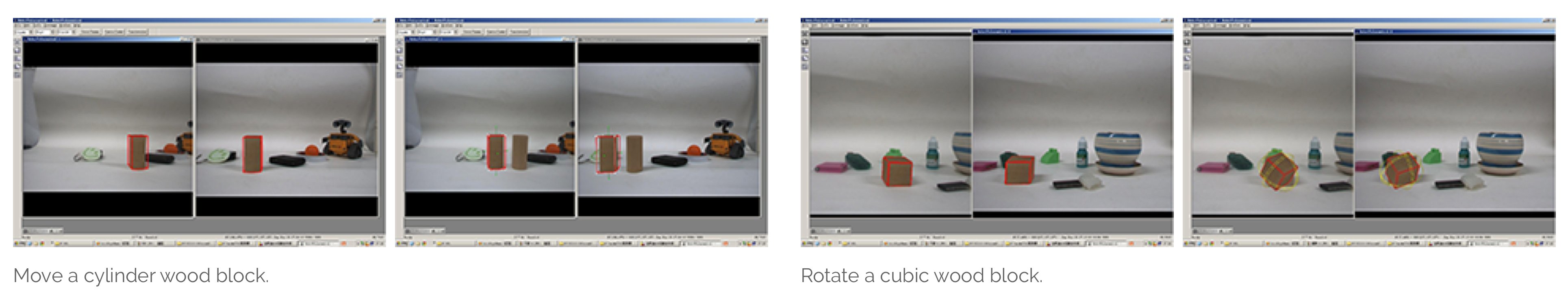

Object Manipulation

Once a virtual object is placed in the VR environment, it can be moved, rotated, zoomed, and so on.
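The three operations named above amount to a translation, a rotation and a scale applied to the object's geometry. The project's Coin3D code is not shown here, so the following is a library-free sketch under stated assumptions: `ObjectTransform` and `apply` are hypothetical names, rotation is limited to the vertical (z) axis, and zoom is treated as a uniform scale.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 {
    double x, y, z;
};

// Hypothetical per-object transform: zoom, then rotate, then move.
struct ObjectTransform {
    Vec3 translation;   // move: offset added after rotation
    double yawRadians;  // rotate: angle about the z axis
    double scale;       // zoom: uniform scale factor, must be positive
};

// Apply the transform to one point of the object's model.
Vec3 apply(const ObjectTransform& t, const Vec3& p) {
    assert(t.scale > 0.0);
    const double c = std::cos(t.yawRadians);
    const double s = std::sin(t.yawRadians);
    // 1. Zoom about the object's own origin.
    const Vec3 scaled{p.x * t.scale, p.y * t.scale, p.z * t.scale};
    // 2. Rotate about the z axis.
    const Vec3 rotated{c * scaled.x - s * scaled.y,
                       s * scaled.x + c * scaled.y,
                       scaled.z};
    // 3. Move to the target position.
    return Vec3{rotated.x + t.translation.x,
                rotated.y + t.translation.y,
                rotated.z + t.translation.z};
}
```

Scaling and rotating before translating keeps both operations centered on the object itself, so zooming an object does not also push it away from where it was placed.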

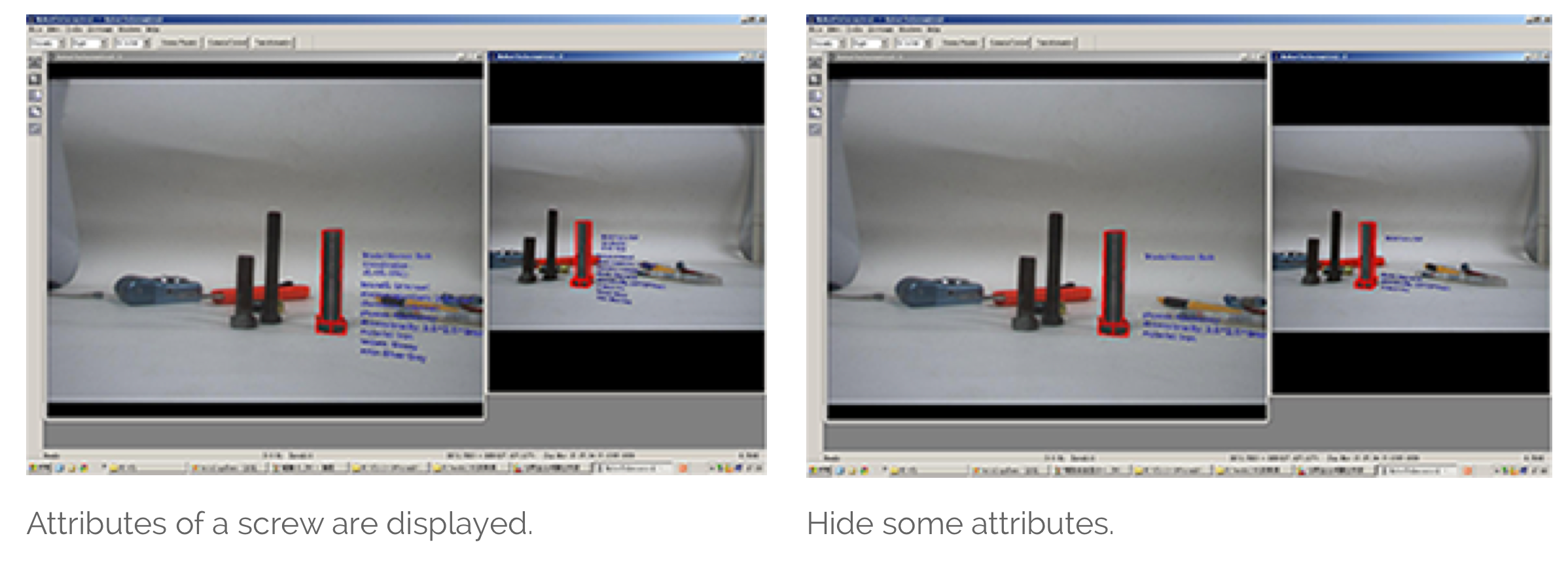

Enhanced Information

Selecting a virtual object displays its enhanced information, including its color, height, width, material, and coordinates.